How to Normalize Data

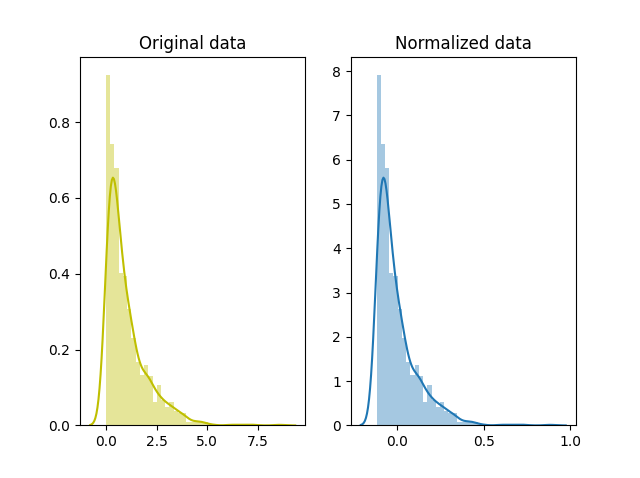

Here, normalization does not mean normalizing the data itself; it means normalizing the residuals by transforming the data. In this sense, normalizing data means normalizing residuals using transformation methods. Some of the more common ways to normalize data include transforming data using a z-score or t-score, which is usually called standardization (in the vast majority of cases, this is what "normalizing data" refers to), and rescaling data to have values between 0 and 1, which is commonly called feature scaling. Another approach is to normalize a dataset by dividing each data point by a constant, such as the standard deviation of the data. In Python, with NumPy imported as `np`, that looks like `data = apple_data['aapl_y']` followed by `data_norm_by_std = [number / np.std(data) for number in data]`.
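A minimal sketch of the two ideas in this paragraph, dividing by the standard deviation and standardizing to a z-score, using only Python's standard library. The price list is hypothetical, and the sketch assumes the population standard deviation (`statistics.pstdev`), which matches the default of NumPy's `np.std` (`ddof=0`).

```python
import statistics

def divide_by_std(values):
    """Scale each value by the (population) standard deviation of the list."""
    sd = statistics.pstdev(values)  # same as np.std's default, ddof=0
    return [v / sd for v in values]

def z_score(values):
    """Standardize: subtract the mean, then divide by the standard deviation."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mu) / sd for v in values]

prices = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical sample prices
print(divide_by_std(prices))  # [1.0, 2.0, 2.0, 2.0, 2.5, 2.5, 3.5, 4.5]
print(z_score(prices))        # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

Dividing by a constant keeps the shape and sign of the data and only changes its units; the z-score additionally centres the data on 0.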

This means that normalization in a DBMS (database management system) can be carried out in Oracle, Microsoft SQL Server, MySQL, PostgreSQL, and any other kind of database. To carry out the normalization process, you start with a rough idea of the data you want to store and apply certain rules to it so that you can get it into a more efficient form. In this text we will learn how to normalize data in R. This involves rescaling it between 0 and 1, and we will discuss the use and implications of the results and why we do it. We will use a sample dataset on height/weight as well as create our own function for normalizing data in R. Database normalization is the process of structuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by Edgar F. Codd as part of his relational model. Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints.
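The paragraph above describes writing a custom function in R that rescales data to the range 0 to 1. The same min-max idea can be sketched in Python; `min_max_normalize` and the height values are hypothetical stand-ins, not the R function or dataset the text refers to.

```python
def min_max_normalize(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant list has no spread, so the division below would fail.
        raise ValueError("cannot rescale a constant list: max equals min")
    return [(v - lo) / (hi - lo) for v in values]

heights_cm = [150, 160, 170, 180, 190]  # hypothetical height sample
print(min_max_normalize(heights_cm))  # [0.0, 0.25, 0.5, 0.75, 1.0]
```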

Database Normalization: A Step-by-Step Guide with Examples

How to normalize in Excel. In statistics, "normalization" refers to the transformation of arbitrary data into a standard distribution, typically a normal distribution with a mean of 0 and a variance of 1. Normalizing your data allows you to compare the effects of different factors in your business without regard to scale: the highs are high and the lows are low.

In statistics and applications of statistics, normalization can have a range of meanings. In the simplest cases, normalization of ratings means adjusting values measured on different scales to a notionally common scale, often prior to averaging. In more complicated cases, normalization may refer to more sophisticated adjustments where the intention is to bring the entire probability distributions of adjusted values into alignment.

Second normal form (2NF): meet all the requirements of the first normal form. Remove subsets of data that apply to multiple rows of a table and place them in separate tables. Create relationships between these new tables and their predecessors through the use of foreign keys.

Instructions. Step 1: Identify the minimum and maximum values. Identify the smallest and largest numbers in the original data set and represent them with the variables A and B, respectively. Tip: if you are normalizing a set of data where the smallest number is 25 and the largest number is 75, set capital A equal to 25 and capital B equal to 75.

When you're trying to normalize a set of data, you need some additional information. Imagine you have some data running from cell A2 to cell A51. Before you normalize data in Excel, you need the average (or "arithmetic mean") and standard deviation of the data.
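The second-normal-form rules described in this section can be illustrated with Python's built-in `sqlite3` module and an in-memory database. This is a sketch under assumed names: the `order_items_flat`, `products`, and `order_items` tables and their rows are hypothetical. `product_name` depends only on `product_id`, which is just part of the composite key, so it is moved to its own table and referenced through a foreign key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when on
cur = conn.cursor()

# Not in 2NF: product_name depends only on product_id, which is just part of
# the composite key (order_id, product_id), so it repeats on every order row.
cur.execute("""CREATE TABLE order_items_flat (
    order_id INTEGER, product_id INTEGER, product_name TEXT, quantity INTEGER,
    PRIMARY KEY (order_id, product_id))""")
cur.executemany("INSERT INTO order_items_flat VALUES (?, ?, ?, ?)", [
    (1, 10, "Keyboard", 2),
    (1, 20, "Mouse", 1),
    (2, 10, "Keyboard", 5),
])

# 2NF step 1: move the repeated product data into its own table.
cur.execute("CREATE TABLE products (product_id INTEGER PRIMARY KEY, product_name TEXT)")
cur.execute("""INSERT INTO products
    SELECT DISTINCT product_id, product_name FROM order_items_flat""")

# 2NF step 2: keep only what depends on the whole key, linked by a foreign key.
cur.execute("""CREATE TABLE order_items (
    order_id INTEGER,
    product_id INTEGER REFERENCES products(product_id),
    quantity INTEGER,
    PRIMARY KEY (order_id, product_id))""")
cur.execute("""INSERT INTO order_items
    SELECT order_id, product_id, quantity FROM order_items_flat""")

# A join reconstructs the original rows without storing each name twice.
rows = cur.execute("""SELECT o.order_id, o.product_id, p.product_name, o.quantity
    FROM order_items o JOIN products p ON p.product_id = o.product_id
    ORDER BY o.order_id, o.product_id""").fetchall()
print(rows)
```

The product name now lives in exactly one row per product, so renaming a product is a single update instead of one per order line.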

How to Normalize Data (Howcast)

How to normalize data in Excel, Tableau, or any analytics tool you use: the concept of data normalization is one of the few concepts that come up over and over again during your work as an analyst. This concept is so important that without fully understanding its importance and applications, you'll never succeed as an analyst. One way to turn an average machine learning model into a good one is through the statistical technique of normalizing data. If we don't normalize the data, the machine learning algorithm may be dominated by the variables that use a larger scale, adversely affecting model performance.
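To see how a larger-scale variable can dominate, consider a distance-based comparison of the kind nearest-neighbour models rely on. The sketch below assumes hypothetical customers described by age and income and applies min-max scaling per feature; `min_max_columns` is an illustrative helper, not a library function.

```python
import math

def min_max_columns(rows):
    """Min-max normalize each column (feature) of a list of equal-length rows."""
    scaled_cols = []
    for col in zip(*rows):  # iterate over features, not rows
        lo, hi = min(col), max(col)
        if hi == lo:
            raise ValueError("a constant feature cannot be min-max scaled")
        scaled_cols.append([(v - lo) / (hi - lo) for v in col])
    return [tuple(r) for r in zip(*scaled_cols)]

# Hypothetical customers: (age in years, annual income in dollars)
customers = [(25, 50_000), (60, 51_000), (26, 90_000)]
a, b, c = customers

# Raw data: income swamps age, so a looks far closer to b (35 years older)
# than to c (1 year older).
print(math.dist(a, b), math.dist(a, c))

# After scaling, each feature spans [0, 1] and both contribute comparably.
sa, sb, sc = min_max_columns(customers)
print(math.dist(sa, sb), math.dist(sa, sc))
```

On the raw values the two distances differ by a factor of about 40; after scaling they are both close to 1, because age and income now carry similar weight.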

Normalized data is a loosely defined term, but in most cases it refers to standardized data, where the data is transformed using the mean and standard deviation of the whole set, so it ends up in a standard distribution with a mean of 0 and a variance of 1. In another usage in statistics, normalization refers to the creation of shifted and scaled versions of statistics, where the intention is that these normalized values allow the comparison of corresponding normalized values for different datasets in a way that eliminates the effects of certain gross influences, as in an anomaly time series. Some types of normalization involve only a rescaling, to arrive at values relative to some size variable.
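The two usages in this paragraph, shifting a series to get anomalies and rescaling relative to a size variable, can be sketched as follows. The temperatures, case counts, and populations are hypothetical, and the baseline here is simply each series' own mean (real anomaly series often use a fixed reference period instead).

```python
import statistics

# Hypothetical monthly temperatures (deg C) for two cities with different climates.
city_a = [10.0, 12.0, 14.0, 12.0]
city_b = [25.0, 27.0, 29.0, 27.0]

def anomalies(series):
    """Shift a series by its own mean so values become departures from 'normal'."""
    mu = statistics.fmean(series)
    return [x - mu for x in series]

# Once the climate difference is removed, both cities show the same pattern.
print(anomalies(city_a))  # [-2.0, 0.0, 2.0, 0.0]
print(anomalies(city_b))  # [-2.0, 0.0, 2.0, 0.0]

# Rescaling only: express counts relative to a size variable (here population).
cases = {"town": 50, "city": 2_000}
population = {"town": 10_000, "city": 1_000_000}
per_1000 = {k: 1000 * cases[k] / population[k] for k in cases}
print(per_1000)  # {'town': 5.0, 'city': 2.0}
```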

Normalization (Statistics), Wikipedia

About normalized data. The word "normalization" is used informally in statistics, so the term normalized data can have multiple meanings. In most cases, when you normalize data you eliminate the units of measurement, enabling you to more easily compare data from different places.

Step 2: Identify the range of the normalized data set and represent its lower and upper bounds with the variables a and b. Tip: if you are normalizing to the range between 1 and 10, set a equal to 1 and b equal to 10. Step 3: Calculate the normalized value. Calculate the normalized value of any number x in the original data set using the equation $x' = a + \frac{(x - A)(b - a)}{B - A}$, that is, a plus (x minus A) times (b minus a) divided by (B minus A), where A and B are the minimum and maximum of the original data from step 1.
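The three steps can be sketched as a small Python function. `rescale` is a hypothetical helper name, and it assumes the original data is not constant (so that B - A is nonzero). The example reuses the tips' values: original minimum 25 and maximum 75, target range 1 to 10.

```python
def rescale(x, A, B, a, b):
    """Map x from the original range [A, B] onto the target range [a, b].

    A, B: minimum and maximum of the original data (step 1).
    a, b: lower and upper bounds of the target range (step 2).
    """
    return a + (x - A) * (b - a) / (B - A)  # step 3

data = [25, 37.5, 50, 75]  # hypothetical data set with min 25 and max 75
A, B = min(data), max(data)
print([rescale(x, A, B, 1, 10) for x in data])  # [1.0, 3.25, 5.5, 10.0]
```

The minimum always maps to a and the maximum to b; everything in between keeps its relative position.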

Normalization is a design technique that is widely used as a guide in designing relational databases. It covers first normal form, second normal form, third normal form, BCNF, and fourth normal form. Ultimately, data normalization consolidates data, combining it into a much more organized structure. Consider the state of big data today and how much of it consists of unstructured data. Organizing it and turning it into a structured form is needed now more than ever, and data normalization helps with that effort.

It may not be the cleanest solution, but you can scale the normalized values to do that. If you want, for example, a range of 0-100, you just multiply each number by 100. If you want a range that doesn't start at zero, like 10-100, you would scale by the max minus min of the target range (here 90) and then add the min (here 10) to the values you get. If you want to normalize your data, you can do so as you suggest and simply calculate the following:

$$z_i=\frac{x_i-\min(x)}{\max(x)-\min(x)}$$

where $x=(x_1,\dots,x_n)$ and $z_i$ is now your $i^{th}$ normalized data point.
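Scaling the 0-to-1 values $z_i$ onto another range $[a, b]$ can be written out as below; it agrees with the general rescaling equation from the steps, with $A = \min(x)$ and $B = \max(x)$.

$$z_i = \frac{x_i - \min(x)}{\max(x) - \min(x)}, \qquad 0 \le z_i \le 1$$

$$x_i' = a + (b - a)\,z_i = a + \frac{(x_i - \min(x))(b - a)}{\max(x) - \min(x)}$$

For 0-100, $a = 0$ and $b = 100$ give $x_i' = 100\,z_i$; for 10-100, $a = 10$ and $b = 100$ give $x_i' = 10 + 90\,z_i$.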

Cohort analyses like the one shown in the image below will, by their very design, normalize for time, but most other data visualizations won't, and you'll need to do a little more work. The table above shows a cohort table with each row representing a group of X and each column representing a time period (day, week, month).

Normalization is a technique often applied as part of data preparation for machine learning. The goal of normalization is to change the values of numeric columns in the dataset to a common scale.

Normalization is the process of efficiently organizing data in a database. There are two goals of the normalization process: eliminating redundant data (for example, storing the same data in more than one table) and ensuring data dependencies make sense (only storing related data in a table). Both of these are worthy goals, as they reduce the amount of space a database consumes and ensure that data is logically stored.

Normalize data in a vector and matrix by computing the z-score. Create a vector v and compute the z-score, normalizing the data to have mean 0 and standard deviation 1: `v = 1:5; n = normalize(v)` returns `n = 1×5` with the values -1.2649, -0.6325, 0, 0.6325, 1.2649.
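The MATLAB result for `v = 1:5` (-1.2649, -0.6325, 0, 0.6325, 1.2649) can be reproduced in Python, which makes one detail visible: these numbers only come out with the sample standard deviation (dividing by n - 1), which MATLAB's `std` uses by default. With the population standard deviation the end values would be ±1.4142 instead. `zscore_sample` is a hypothetical helper for illustration.

```python
import statistics

def zscore_sample(values):
    """z-score using the sample standard deviation (n - 1 in the denominator)."""
    mu = statistics.fmean(values)
    s = statistics.stdev(values)  # sample std; statistics.pstdev would divide by n
    return [(v - mu) / s for v in values]

v = list(range(1, 6))  # same values as MATLAB's v = 1:5
print([round(z, 4) for z in zscore_sample(v)])  # [-1.2649, -0.6325, 0.0, 0.6325, 1.2649]
```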
